This post first appeared on the Policy & Politics blog. It summarizes an article published in Policy & Politics.

Could policy theories help to understand and facilitate the pursuit of equity (or reduction of unfair inequalities)?

We are producing a series of literature reviews to help answer that question, beginning with the study of equity policy and policymaking in health, education, and gender research.

Each field has a broadly similar focus. Most equity researchers challenge the ‘neoliberal’ approaches to policy that favour minimal state action, individual responsibility, and market forces. They seek ‘social justice’ approaches, favouring far greater state intervention to address the social and economic causes of unfair inequalities via redistributive or regulatory measures. They also seek policymaking reforms that reflect the fact that most determinants of inequalities are not confined to one policy sector and cannot be addressed in policy ‘silos’. Rather, equity policy initiatives should be mainstreamed via collaboration across (and outside of) government. Each field also projects a profound sense of disenchantment with limited progress, including a tendency to describe a too-large gap between their aspirations and actual policy outcomes. They express high certainty about what needs to happen, but low confidence that equity advocates have the means to achieve it (or to persuade powerful politicians to change course).

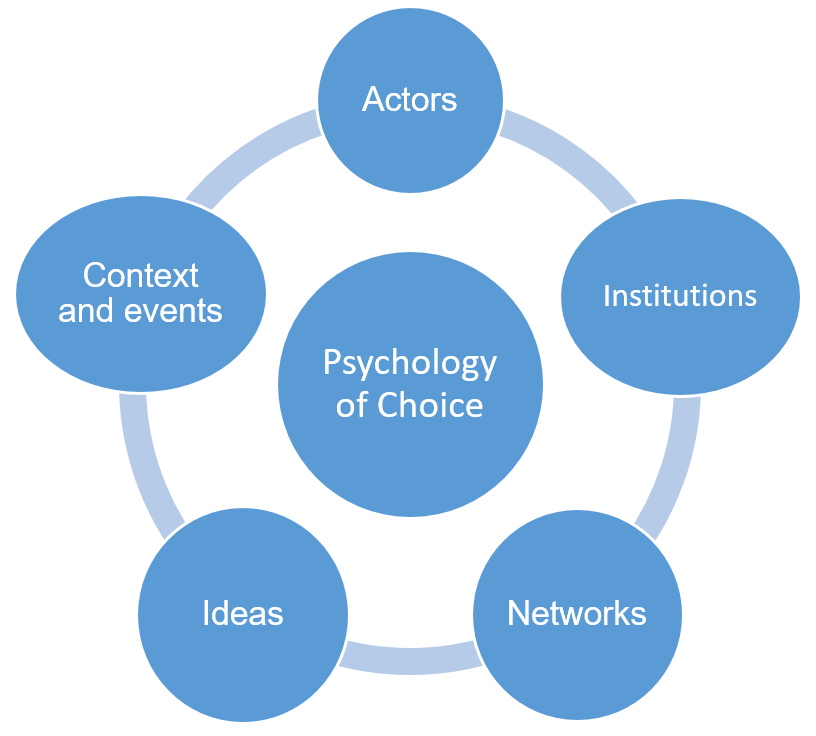

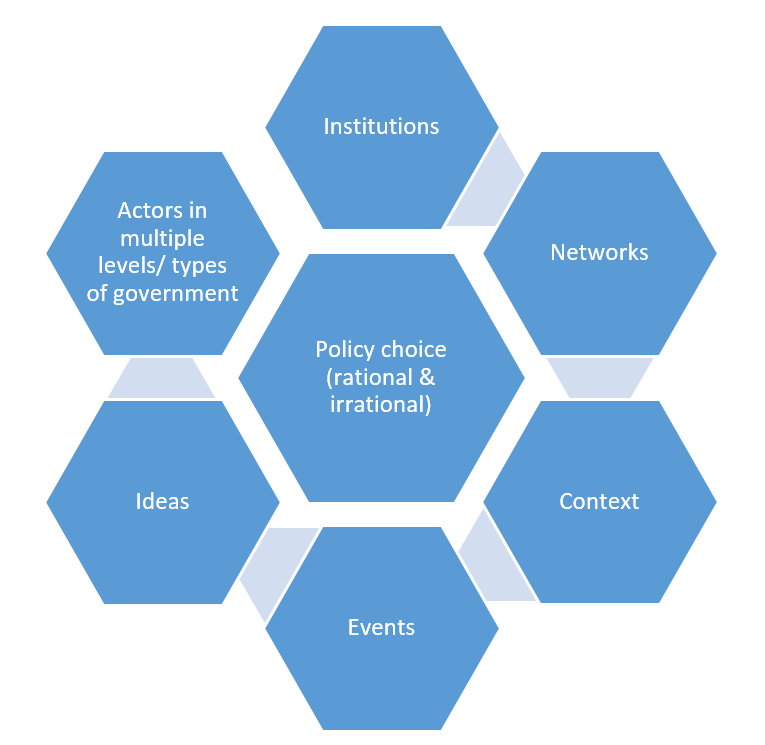

Policy theories could offer some practical insights for equity research, but they do not always offer the lessons that some advocates seek. In particular, health equity researchers seek to translate insights on policy processes into a playbook for action, such as how to frame policy problems to generate more attention to inequalities, secure high-level commitment to radical change, and improve the coherence of cross-cutting policy measures. Yet policy theories are more likely to identify the dominance of unhelpful policy frames, the rarity of radical change, and the strong rationale for uncoordinated policymaking across a large number of venues. Rather than fostering technical fixes with a playbook, they encourage more engagement with the inescapable dilemmas and trade-offs inherent to policy choice. This focus on contestation (such as when defining and addressing policy problems) is more of a feature of education and gender equity research.

While we ask what policy theories have to offer other disciplines, in fact the most useful lessons emerge from cross-disciplinary insights. They highlight two very different approaches to transformational political change. One offers the attractive but misleading option of radical change through non-radical action: mainstreaming equity initiatives into current arrangements and using a toolbox to make continuous progress. Yet each review highlights a tendency for radical aims to be co-opted and often used to bolster the rules and practices that protect the status quo. The other offers radical change through overtly political action, fostering continuous contestation to keep the issue high on the policy agenda and to resist co-option. There is no clear step-by-step playbook for this option, since political action in complex policymaking systems is necessarily uncertain and often unrewarding. Still, insights from policy theories and equity research show that grappling with these challenges is inescapable.

Ultimately, we conclude that advocates of profound social transformation are wasting each other’s time if they seek short-cuts and technical fixes to enduring political problems. Supporters of equity policy should be cautious about any attempt to turn a transformational political project into a technical process built around a ‘toolbox’ or ‘playbook’.

You can read the original research in Policy & Politics:

Paul Cairney, Emily St.Denny, Sean Kippin, and Heather Mitchell (2022) ‘Lessons from policy theories for the pursuit of equity in health, education, and gender policy’, Policy and Politics https://doi.org/10.1332/030557321X16487239616498

This article is an output of the IMAJINE project, which focuses on addressing inequalities across Europe.