That question was the title of an op-ed that I wrote for the Scotsman on 11 July 2000 about compensation for people with haemophilia infected by hepatitis-C through blood products in the NHS. The answer turned out to be decades, largely because the UK government has always resisted the idea of compensation for treatment by the NHS, although it has made exceptions in relation to HIV or by referring to wider financial support by the state.

Attention is high in 2024 because a new UK government announcement on meaningful compensation is strongly likely, prompted largely by the work of the Infected Blood Inquiry. For a summary of key developments, see ‘What is the infected blood scandal and will victims get compensation?’

Below, I reproduce that Scotsman op-ed to provide background on UK government resistance to compensation, then link to some resources on how the issue played out in Scotland in the early years of devolution. In short, it became an intergovernmental relations issue when (1) there was pressure on the Scottish Government (then ‘Executive’) to respond, but (2) the UK government successfully resisted the idea that the Scottish Government could go its own way.

‘How long must these sufferers wait for the compensation they deserve?’

“SCOTTISH haemophiliacs are eagerly awaiting the results of a government investigation into the issue of hepatitis-C infection through blood products. However, this is an issue which should concern us all, not only because of the extent of suffering this type of infection causes, but also because it strikes at the heart of the whole question of compensation within the NHS.

It is also fitting that this issue should raise its head during the annual international AIDS conference, since – at least politically – the similarities between hep-C and HIV infection are uncanny. So how do we explain why HIV-infected haemophiliacs were compensated over ten years ago and yet haemophiliacs infected by hepatitis-C have had to wait until now even to hear if they have a case?

HIV and hepatitis-C are both particularly serious infections. The former – unless we believe Professor Duesberg – causes AIDS and diminishes the body’s ability to overcome subsequent infections, however slight. The latter, in the long term, causes chronic liver disease and cirrhosis. Either infection combined with haemophilia causes devastation among sufferers, their families and their friends. So why does one particular sufferer receive compensation and another doesn’t?

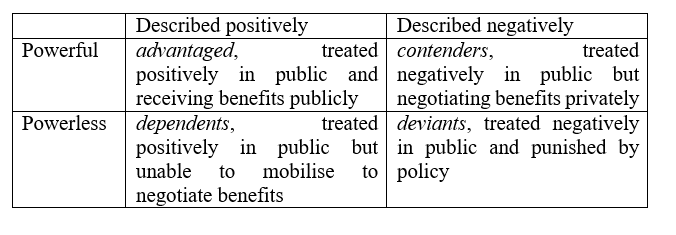

To put it bluntly, the main reason may well be that hepatitis-C is far less likely to capture the imagination of the public, Parliament or the government of the day. Hepatitis-C, unlike HIV, is only transmitted through blood and so its incidence within the general public is much less likely. Rather, on the whole it will only affect haemophiliacs and intravenous drug users. It is associated with minorities and/or deviance. It is unlikely to be able to maintain attention for any particular length of time. This is the key to the difference – HIV caught the public’s imagination because it was, and still is, presented as an infection which did or does not discriminate. It is transmitted sexually; it is transmitted through blood; it is transmitted from mother to baby; for a while it was thought that it might even be transmitted through saliva. It therefore affects us all and, albeit to a lesser and lesser extent, HIV continues to grab the headlines.

It is this crucial difference which informs much of the process of compensation. In both cases successive British (and now Scottish) governments attempted to deny responsibility for the compensation of those infected through blood products. However, this was largely unsuccessful with HIV because the issue just did not go away.

When, in the early Eighties, ministers denied the links between blood products and HIV, newspapers were filled with scientific reports which suggested otherwise. When ministers tried to delegate responsibility for HIV infections to haemophiliac specialists or health authorities, Parliament asserted its right to hold them to account. When the government accepted both the links and the responsibility, but not the need for compensation, the continuous public reaction forced a U-turn in government policy. And finally when the government accepted the need to compensate, but only those haemophiliacs who constituted a “special case”, it was again defeated by the threat of legal action supported by media, British Medical Association and MPs.

But is this likely to happen in the case of hepatitis-C? While those affected may take hope from the fact that the Scottish executive is finally taking their plight seriously, there is still one major obstacle to the granting of compensation – precedent. The most striking aspect of HIV in this regard was that the government could not afford to grant “no-fault” compensation to anyone suffering at the hands of the NHS for fear of opening the floodgates for a succession of similar claims. So, when granting a “trust fund” to HIV-infected haemophiliacs, it argued that these were “wholly exceptional circumstances”, with the implication that such compensation (although it was never termed as such) would never be repeated.

On the other hand, there is hope to be taken from this process, not least because since then the government has argued that those non-haemophiliacs infected with HIV through blood products were “also a very special case.” It seems, then, that to be successful a group just has to agree that what it is receiving is an exceptional compassionate payment rather than “compensation”.

More seriously, whether the government likes it or not, it has already set the precedent for haemophiliacs, when it recognised in 1990 that theirs were “wholly exceptional circumstances” and that haemophilia combined with another serious condition merited compensation, however this is phrased.

The government therefore has a responsibility to treat hepatitis-C sufferers in the same way as it treated those infected with HIV, irrespective of the findings of its investigation”

Attention in the Scottish Parliament (2001)

In 2001, the Scottish Parliament Health and Community Care Committee report argued that sufferers should receive financial support, but largely rejected the idea of compensation:

‘100. Should this assistance that we advocate be described as compensation? “Compensation” implies negligence or fault, and on the (admittedly limited) basis of the evidence we considered, we do not think that this has been established. In the end, what matters most, in our view, is not what this assistance is called. What does matter is that it makes a clear, practical difference, and that it is delivered promptly. We would like to see a scheme established within twelve months’.

Compensation as an issue of intergovernmental relations (2003-)

By early 2003, compensation had become an intergovernmental relations (IGR) issue. Barry Winetrobe’s report in February 2003 noted that:

“9.3 Hepatitis C referral to the JCPC

It has been reported in late January that differences in legal advice to the UK Government and the Scottish Executive over the latter’s proposal to make ex gratia payments to Hepatitis C sufferers, whose condition was caused by contaminated blood, may have to be resolved ultimately by the Judicial Committee of the Privy Council. Two difficulties appear to be relevant, one of which (similar to earlier arguments over free personal care) involves possible clawback through the (reserved) social security system of some of the payments made by the Executive. The other relates to the more general question of whether the Executive actually has the power to make such payments. This appears to be a reference to Head F (social security) of the list of reserved matters in schedule 5 of the Scotland Act, which, in its interpretation provisions, may exclude such payments from the Executive’s devolved competence or the Parliament’s legislative competence”

Cairney (2006: 433) relates compensation to disputes between the UK and Scottish governments:

“This imbalance of power is apparent when disputes rise to the surface. The most high profile policy of the first Scottish Parliament session (1999–2003) was the decision in Scotland to depart from the UK line and implement the recommendations of the Sutherland Report on ‘free’ personal care for the elderly. … if the UK government had been sympathetic to the policy a solution would have been found, but general Whitehall indifference to Scotland had turned to specific hostility (particularly since UK ministers failed to persuade their Scottish counterparts to maintain a UK line). This approach was also taken with the issue of Hepatitis C compensation in Scotland, with the Department of Work and Pensions threatening to reduce benefit payments to those in receipt of Scottish Executive compensation (Lodge, 2003 reproduced below). This case was taken further, with Whitehall delaying Scottish payments on the basis of competence (health devolved, but compensation for injury and illness reserved) until it came up with a UK-wide scheme to be implemented in Scotland (Anon., 2004a)”

Cairney (2011: 98-9) notes:

“Hepatitis C became a cause of relative tension, with the UK Government apparently willing to challenge the Scottish Executive’s right to provide compensation (payments related to injury and illness are reserved), until it came up with a UK-wide compensation scheme (with which Scottish ministers were less happy) (Winetrobe, February 2003: 39–40; February 2004: 42; May 2004: Cairney, 2006: 433; January 2007: 83). The Scottish Executive and UK Government also faced calls for a public inquiry into Hep C in 2006 (Cairney, September 2006: 75). The Scottish Government oversaw its own inquiry on Hep C, partly to put pressure on the UK Government to follow suit (Cairney, May 2008: 87; May 2009: 58)”

The ‘view from the centre’

May 2003: Guy Lodge’s report states that:

“The dispute between the UK government and the Scottish Executive, over the decision by the Executive to provide compensation to anyone who contracted Hepatitis C on the NHS in the 1970s and 1980s as a result of contaminated blood, has continued this quarter. The Scottish Health Minister, Malcolm Chisholm, announced proposals for ex gratia payments in January 2003, but has had to concede that no payments will be made until the Scottish Executive resolves the issue with Westminster. The dispute centres on whether or not the Department for Work and Pensions will try and ‘clawback’ the money used by the Executive in compensation through the social security system. There is also a debate over whether the Executive actually has the power to make such payments.

In what many in Scotland have interpreted as a snub to the Scottish Parliament, Andrew Smith, the Secretary of State for Work and Pensions, has refused to appear before the Health Committee, which had invited him to give evidence on the issue.

The SNP have been keen to raise the issue at Westminster. On 21 May, Annabelle Ewing quizzed the Prime Minister on why no compensation had been made during questions to the Prime Minister.

Annabelle Ewing (SNP – Perth): The Prime Minister will be aware that the Scottish Parliament agreed at the beginning of this year to pay compensation to hepatitis C sufferers in Scotland who contracted the disease through contaminated NHS blood products. However, not a penny piece has yet been paid, as a result of dithering by Westminster over jurisdiction. Can I inject a sense of urgency into the debate and ask the Prime Minister to confirm today that Westminster will not frustrate the will of the Scottish Parliament to pay compensation under exemption from the benefits clawback regulations? Surely the Prime Minister would agree that the people involved have already waited far too long for justice.

The Prime Minister: I am aware of the Scottish Executive’s decision to pay compensation to hepatitis C sufferers. I am not aware of the other particular problem to which the hon. Lady has just drawn attention. I shall look into it, and write to her about it.

Annabelle Ewing also raised the issue on 20 May at Scotland Office questions in which Liddell suggests that a decision will be made after the elections in Scotland.

Annabelle Ewing: Surely the key issue is whether she will fight for the right of the Scottish Parliament to pay compensation and for a 100 per cent exemption from the benefit clawback rules. If she will not do that, will she explain to hepatitis C sufferers in Scotland why on earth Scottish taxpayers are paying £7 million for the running costs of her office?

Mrs. Liddell: There are serious legal and policy-based issues in relation to hepatitis C. There have been extensive discussions between the Scottish Executive and the Department for Work and Pensions, not least on whether payments should be taken into account as capital or income when someone claims income-related benefits. Those discussions could not continue because of the Scottish Parliament elections. As soon as the Minister for Health and Community Care is in place in the Scottish Parliament, those discussions will continue”